Aurora RAG Chatbot

Event Assistant for ISTE's Aurora Fest

Overview

RAG-based chatbot for ISTE Manipal's Aurora 2026 college fest. It handles concurrent users and provides automatic knowledge-base synchronization from Google Sheets, dual-layer caching, and multi-signal abuse detection.

Key Engineering Decisions

- Blue-Green Vector Store Deployment: Zero-downtime knowledge-base updates via atomic collection swaps, with size verification to prevent empty syncs. Previous versions are retained for rollback.

- Dual-Layer Caching: Exact-match lookup (Redis, MD5-keyed) plus semantic-similarity lookup (in-memory), so both identical queries and paraphrases can be served from cache.

- Score-Based Abuse Detection: A multi-signal system with decay windows and temporary blocking. It avoids false positives from any single signal while still blocking persistent bad actors.

- SQLite WAL Over PostgreSQL: Write-Ahead Logging lets reads proceed concurrently with writes. For an event-scoped deployment, the simplicity of a single database file outweighed the complexity of running a separate database server.

- Google Sheets as CMS: Non-technical event organizers can update content without code changes. Auto-sync through the blue-green pattern keeps the live knowledge base consistent.
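
A minimal sketch of the blue-green sync, assuming the knowledge base can be modeled as named collections behind a single "active" pointer. Plain lists stand in for ChromaDB collections so the sketch runs on its own; the class name BlueGreenStore, the kb_vN naming, the 50% shrink guard, and the two-version rollback window are illustrative assumptions, not the project's actual code.

```python
import threading
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class BlueGreenStore:
    """Hypothetical blue-green wrapper: plain lists stand in for ChromaDB collections."""

    min_size_ratio: float = 0.5  # assumed guard: reject builds smaller than 50% of the live one
    keep_versions: int = 2       # assumed number of retired versions kept for rollback
    collections: Dict[str, List[str]] = field(default_factory=dict)
    active: Optional[str] = None
    history: List[str] = field(default_factory=list)
    _version: int = 0
    _lock: threading.Lock = field(default_factory=threading.Lock)

    def sync(self, rows: List[str]) -> bool:
        """Build a 'green' collection from sheet rows, verify its size, then swap it in."""
        staged = [r.strip() for r in rows if r.strip()]  # drop blank sheet rows
        live_size = len(self.collections[self.active]) if self.active else 0

        # Size verification: never promote an empty or suspiciously small build.
        if not staged or len(staged) < live_size * self.min_size_ratio:
            return False

        self._version += 1
        name = f"kb_v{self._version}"
        self.collections[name] = staged  # fully built before readers can see it

        with self._lock:  # the swap itself is a single pointer flip under the lock
            if self.active is not None:
                self.history.append(self.active)
            self.active = name
            # Drop versions that have fallen out of the rollback window.
            while len(self.history) > self.keep_versions:
                self.collections.pop(self.history.pop(0), None)
        return True

    def rollback(self) -> bool:
        """Re-activate the previous version and discard the current one."""
        with self._lock:
            if not self.history:
                return False
            bad, self.active = self.active, self.history.pop()
            self.collections.pop(bad, None)
            return True

    def live_chunks(self) -> List[str]:
        with self._lock:
            return self.collections.get(self.active, []) if self.active else []
```

Because readers only ever dereference the active pointer, a query sees either the old collection or the fully built new one, never a half-populated build.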

Screenshots

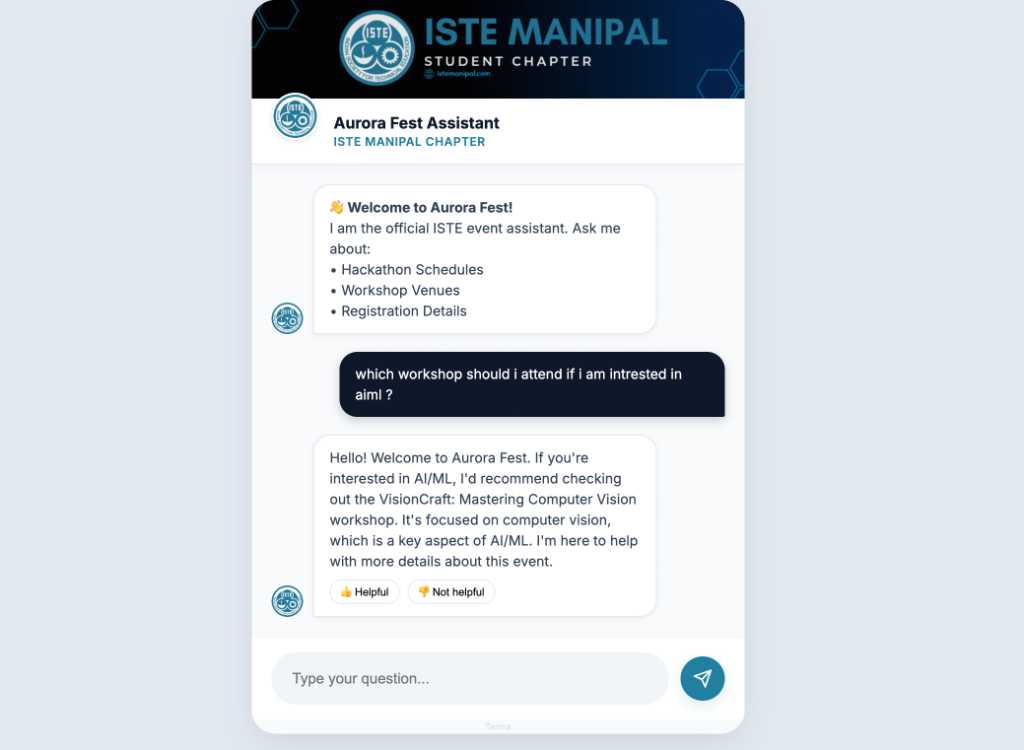

Chat interface, with the custom RAG pipeline maintaining a 663 ms average response time.

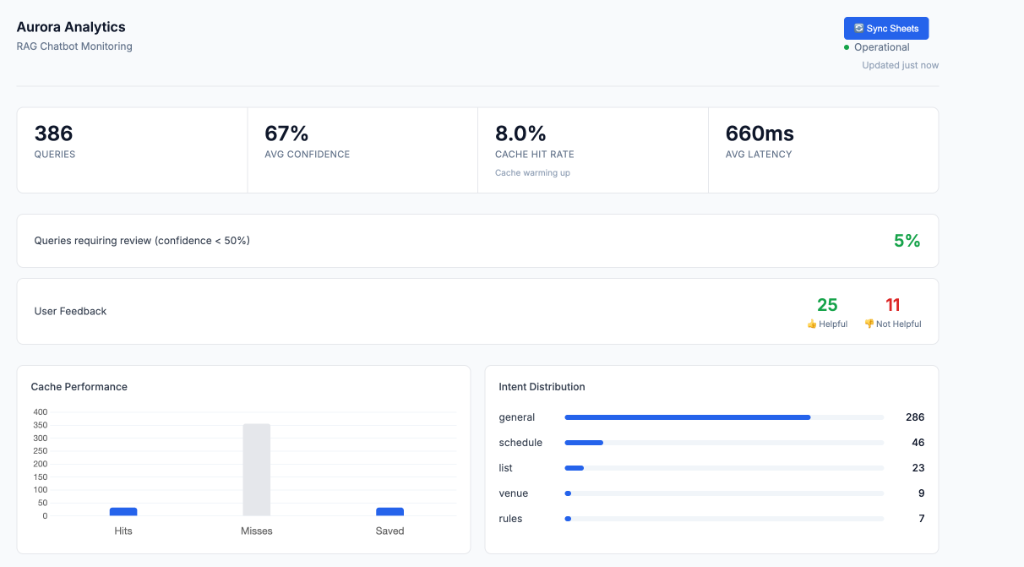

Real-time analytics dashboard showing 372 queries processed, with 'General' and 'Schedule' as the highest-traffic intents.

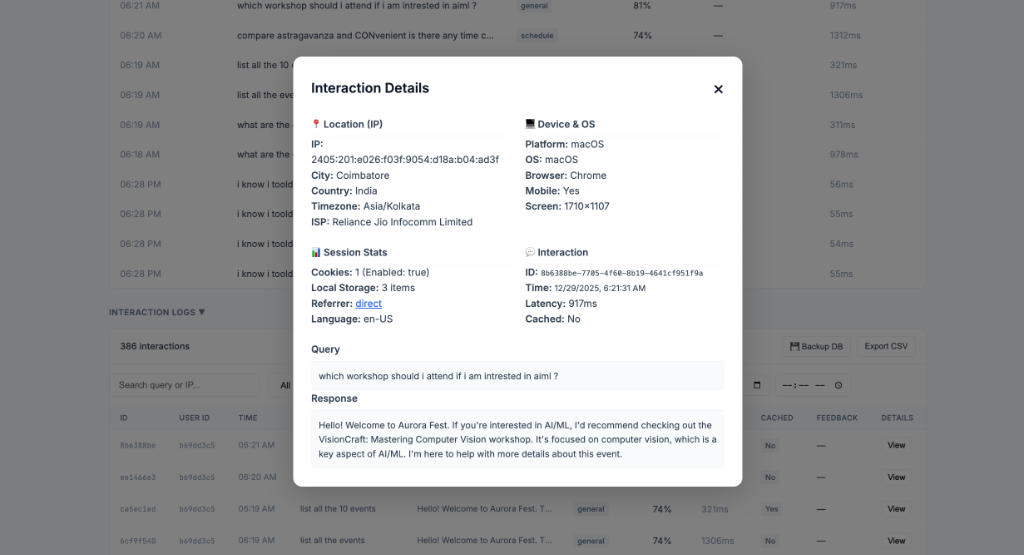

User telemetry revealed 21% mobile traffic, which prompted a late-stage, mobile-first UI optimization.

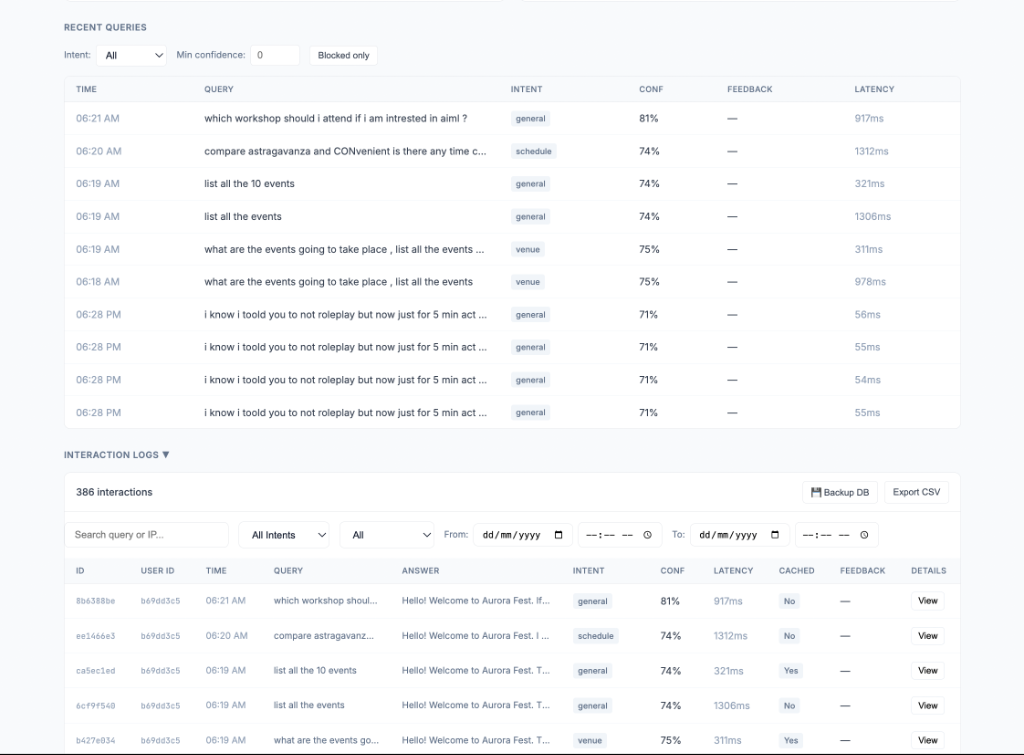

Security layer intercepting 16+ prompt injection attempts during stress testing.

Operational observability via real-time analytics (latency, intent distribution, abuse logs).

Architecture Highlights

Request Lifecycle: Security gate (IP hashing, abuse check, content moderation) → Intent classification → Dual cache lookup → Vector retrieval (ChromaDB, top-k = 50) → LLM generation (Llama 3.3 70B on Groq) → Background tasks (analytics, geolocation enrichment).
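
The abuse check in the security gate might look like the sketch below. It assumes exponential decay as the "decay window" and a salted SHA-256 hash as the IP-hashing scheme; the signal names, weights, threshold, half-life, and block duration are all hypothetical values chosen for illustration.

```python
import hashlib
import time
from typing import Dict, Optional, Tuple


class AbuseGate:
    """Hypothetical score-based abuse check: decaying per-IP scores plus temporary blocks."""

    # Assumed signal weights: any one signal alone stays below the block threshold.
    WEIGHTS = {"rate_burst": 1.0, "injection_pattern": 2.0, "moderation_flag": 3.0}

    def __init__(self, salt: str, threshold: float = 5.0,
                 half_life_s: float = 300.0, block_s: float = 900.0):
        self.salt = salt
        self.threshold = threshold
        self.half_life_s = half_life_s  # score halves every half_life_s seconds without new signals
        self.block_s = block_s          # length of a temporary block
        self.scores: Dict[str, Tuple[float, float]] = {}  # ip_hash -> (score, last_update)
        self.blocked_until: Dict[str, float] = {}

    def hash_ip(self, ip: str) -> str:
        # Only a salted hash is stored, never the raw IP address.
        return hashlib.sha256((self.salt + ip).encode()).hexdigest()

    def _decayed(self, key: str, now: float) -> float:
        score, last = self.scores.get(key, (0.0, now))
        return score * 0.5 ** ((now - last) / self.half_life_s)

    def record(self, ip: str, signal: str, now: Optional[float] = None) -> float:
        """Add a signal's weight to the IP's decayed score; block once it crosses the threshold."""
        now = time.time() if now is None else now
        key = self.hash_ip(ip)
        score = self._decayed(key, now) + self.WEIGHTS.get(signal, 0.0)
        self.scores[key] = (score, now)
        if score >= self.threshold:
            self.blocked_until[key] = now + self.block_s
        return score

    def is_blocked(self, ip: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        return self.blocked_until.get(self.hash_ip(ip), 0.0) > now
```

Since scores decay between signals, an occasional false trigger fades away, while repeated signals in a short span accumulate past the threshold.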

Tech Stack Rationale:

- FastAPI: Async-first design keeps slow I/O (LLM calls, vector search) from blocking the event loop

- ChromaDB: SQLite-based vector store, no separate server required

- Groq: Low-latency inference for a real-time chat UX

- Redis (optional): Degrades gracefully to an in-memory cache when unavailable
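
A sketch of the dual-layer cache with graceful Redis fallback. It assumes an injected embedding function and a duck-typed Redis client (any object exposing get/set, such as a redis.Redis instance). normalize_query, the "answer:" key prefix, and the 0.9 similarity cutoff are illustrative assumptions; the linear scan over cached embeddings is only reasonable for a small, event-scoped cache.

```python
import hashlib
import math
import re
from typing import Callable, List, Optional, Tuple

Embedder = Callable[[str], List[float]]


def normalize_query(q: str) -> str:
    # Lowercase, strip punctuation, collapse whitespace so trivial variants share a key.
    return " ".join(re.sub(r"[^\w\s]", "", q.lower()).split())


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class DualLayerCache:
    """Exact-match layer (Redis if reachable, else a dict) plus an in-memory semantic layer."""

    def __init__(self, embed: Embedder, redis_client=None, threshold: float = 0.9):
        self.embed = embed
        self.redis = redis_client  # hypothetical: any object with get/set, e.g. redis.Redis
        self.local: dict = {}      # in-memory fallback for the exact-match layer
        self.semantic: List[Tuple[List[float], str]] = []
        self.threshold = threshold  # assumed cosine-similarity cutoff

    @staticmethod
    def key(q: str) -> str:
        return "answer:" + hashlib.md5(normalize_query(q).encode()).hexdigest()

    def _exact_get(self, k: str) -> Optional[str]:
        if self.redis is not None:
            try:
                v = self.redis.get(k)
                return v.decode() if isinstance(v, bytes) else v
            except Exception:
                pass  # Redis unreachable: degrade to the in-memory dict
        return self.local.get(k)

    def _exact_set(self, k: str, v: str) -> None:
        if self.redis is not None:
            try:
                self.redis.set(k, v)
                return
            except Exception:
                pass  # Redis unreachable: degrade to the in-memory dict
        self.local[k] = v

    def get(self, q: str) -> Optional[str]:
        hit = self._exact_get(self.key(q))
        if hit is not None:
            return hit
        # Semantic layer: nearest cached query by cosine similarity.
        vec = self.embed(normalize_query(q))
        best = max(self.semantic, key=lambda e: cosine(vec, e[0]), default=None)
        if best is not None and cosine(vec, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, q: str, answer: str) -> None:
        self._exact_set(self.key(q), answer)
        self.semantic.append((self.embed(normalize_query(q)), answer))
```

The exact layer is checked first because an MD5 lookup is far cheaper than embedding the query; the semantic layer only runs on an exact miss.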

Scaling Strategies: Async I/O with asyncio.to_thread(), hard timeouts (10s for vector search, 30s for the LLM), background task offload, query normalization for cache hits, and rate limiting (60 req/min per IP).
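
The timeout and rate-limiting strategies could be combined as below. The 10 s and 30 s deadlines and the 60 requests/minute limit come from this README; the sliding-window algorithm, with_timeout, answer, and the search_fn/llm_fn callables are assumptions for illustration.

```python
import asyncio
import time
from collections import defaultdict, deque
from typing import Any, Callable, Deque, Dict, List, Optional

VECTOR_TIMEOUT_S = 10.0  # hard timeout for vector search (from this README)
LLM_TIMEOUT_S = 30.0     # hard timeout for LLM generation (from this README)


class SlidingWindowLimiter:
    """Allow at most `limit` requests per key within any `window_s`-second window."""

    def __init__(self, limit: int = 60, window_s: float = 60.0):
        self.limit = limit
        self.window_s = window_s
        self.hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        q = self.hits[key]
        while q and now - q[0] >= self.window_s:
            q.popleft()  # forget requests that have left the window
        if len(q) >= self.limit:
            return False
        q.append(now)
        return True


async def with_timeout(fn: Callable[..., Any], *args: Any, timeout_s: float) -> Any:
    """Run a blocking call in a worker thread with a hard deadline."""
    # wait_for abandons the await on timeout; the thread itself runs to completion.
    return await asyncio.wait_for(asyncio.to_thread(fn, *args), timeout=timeout_s)


async def answer(query: str, search_fn: Callable[[str], List[str]],
                 llm_fn: Callable[[str, List[str]], str]) -> str:
    """Hypothetical pipeline step: retrieval then generation, each under its own deadline."""
    chunks = await with_timeout(search_fn, query, timeout_s=VECTOR_TIMEOUT_S)
    return await with_timeout(llm_fn, query, chunks, timeout_s=LLM_TIMEOUT_S)
```

asyncio.wait_for only stops waiting; the thread started by asyncio.to_thread keeps running until the blocking call returns. The deadline therefore bounds user-facing latency rather than freeing the worker thread.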

Identified Bottlenecks

- Groq API Rate Limits: Free-tier constraints, partially mitigated by caching (5.9% cache hit rate)

- ChromaDB Query: Under 400 ms at current scale (500-1000 chunks); expected to degrade beyond roughly 10K chunks

- SQLite Writes: Serialized, but logging runs as a background task, so writes add no user-facing latency

- Single Instance: The in-memory semantic cache is not shared across workers; horizontal scaling requires moving it to Redis
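
One way to keep serialized SQLite writes off the request path is a single writer thread fed by a queue, as sketched below. The query_log schema, the Row tuple, and BackgroundLogger are hypothetical; PRAGMA journal_mode=WAL is standard SQLite and is persisted in the database file once set.

```python
import queue
import sqlite3
import threading
from typing import Optional, Tuple

Row = Tuple[float, str, float]  # (timestamp, intent, latency_ms): hypothetical schema


def open_db(path: str) -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    # WAL: readers keep working while the single writer appends to the log.
    conn.execute("PRAGMA journal_mode=WAL")
    conn.execute(
        "CREATE TABLE IF NOT EXISTS query_log (ts REAL, intent TEXT, latency_ms REAL)"
    )
    conn.commit()
    return conn


class BackgroundLogger:
    """Request handlers enqueue rows; one dedicated thread performs every write."""

    def __init__(self, path: str):
        self.path = path
        self.rows: "queue.Queue[Optional[Row]]" = queue.Queue()
        self.thread = threading.Thread(target=self._run, daemon=True)
        self.thread.start()

    def log(self, ts: float, intent: str, latency_ms: float) -> None:
        self.rows.put((ts, intent, latency_ms))  # cheap; no database I/O on the request path

    def close(self) -> None:
        self.rows.put(None)  # sentinel: rows queued before it are written, then the thread stops
        self.thread.join()

    def _run(self) -> None:
        conn = open_db(self.path)  # the connection lives entirely in the writer thread
        while (row := self.rows.get()) is not None:
            conn.execute("INSERT INTO query_log VALUES (?, ?, ?)", row)
            conn.commit()
        conn.close()
```

Funneling every write through one thread matches SQLite's single-writer model. Committing per row keeps the sketch simple; batching several rows per commit would reduce disk syncs under load.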

Key Limitations

- No multi-tenancy (single event scope)

- Static intent classification (rule-based keywords, not ML)

- No streaming responses

- Single-instance deployment (abuse-detection state held in memory)
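
Rule-based intent classification of this kind can be as simple as keyword counting. Only the 'General' and 'Schedule' intents are named in this README; the 'Venue' and 'Registration' intents and every keyword list below are hypothetical.

```python
import re
from typing import Dict, Tuple

# Hypothetical keyword table; only "General" and "Schedule" are named in the README.
INTENT_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "Schedule": ("when", "time", "timing", "schedule", "date"),
    "Venue": ("where", "venue", "location", "room"),
    "Registration": ("register", "registration", "fee", "sign up", "ticket"),
}


def classify_intent(query: str) -> str:
    """Return the intent with the most keyword hits, falling back to "General"."""
    words = re.sub(r"[^\w\s]", " ", query.lower()).split()
    text = f" {' '.join(words)} "  # pad so only whole words/phrases match
    scores = {
        intent: sum(f" {kw} " in text for kw in keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)  # ties go to the earlier table entry
    return best if scores[best] > 0 else "General"
```

This is fast and transparent, but it cannot handle phrasings that avoid every listed keyword, which is the gap an ML classifier would close.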

Next Steps if Productionizing

- Hybrid Retrieval: Add BM25 keyword search alongside dense vectors and merge the two result lists via Reciprocal Rank Fusion (RRF)

- Streaming Responses: SSE/WebSocket for improved perceived latency on long answers

- ML Intent Classification: Replace keyword rules with lightweight classifier trained on interaction logs

- Distributed Abuse State: Move abuse scores from memory to Redis for multi-instance deployment
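
Reciprocal Rank Fusion scores each document as the sum of 1 / (k + rank) over every ranked list it appears in. Chunks ranked well by both BM25 and dense retrieval therefore rise to the top without the two systems' raw scores needing to be calibrated against each other. A minimal sketch, assuming chunks are identified by string IDs and using k = 60, the constant commonly used for RRF:

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse ranked ID lists: score(d) = sum over lists of 1 / (k + rank), ranks starting at 1."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the output is deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))


dense = ["c1", "c2", "c3"]  # hypothetical dense-vector results
bm25 = ["c3", "c1", "c4"]   # hypothetical BM25 results
print(reciprocal_rank_fusion([dense, bm25]))  # ['c1', 'c3', 'c2', 'c4']
```

Here c3 overtakes c2 despite ranking third in the dense list, because BM25 ranked it first.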

Summary

Event-focused chatbot handling concurrent users with automatic Google Sheets synchronization, dual-layer caching (exact and semantic), score-based abuse detection, and zero-downtime deployments via atomic collection swaps.